Working with multiple files

Collected data is not always available in a single file. We therefore need to know how to read multiple files and combine them, so that the analysis can be done on the complete data set.

Way 1:

As shown in the previous article, you can read files with the pandas pd.read_* functions (pd.read_csv, pd.read_excel, and so on). If we have several files, possibly with different extensions and separators, we can read each one like this:

import pandas as pd

dataset_1 = pd.read_csv("data1.csv", sep=",")  # Suppose it has 100 rows and 15 columns

dataset_2 = pd.read_csv("data2.csv", sep=",")  # 100 x 15

dataset_3 = pd.read_csv("data3.csv", sep="\t")  # 200 x 15, tab-separated

dataset_4 = pd.read_csv("data4.csv", sep="\t")  # 150 x 15, tab-separated

dataset_5 = pd.read_csv("data5.csv", sep="\t")  # 150 x 15, tab-separated

dataset_6 = pd.read_excel("data6.xlsx", engine="openpyxl")  # 100 x 15, requires the openpyxl package

After reading each file into its own variable, we can combine all of them into a single pandas DataFrame:

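merged_data_set = pd.concat([dataset_1, dataset_2, dataset_3, dataset_4, dataset_5, dataset_6], ignore_index=True)  # ignore_index=True renumbers the rows 0..799 instead of repeating each file's own index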

Finally, the merged data set contains all the data: 100 + 100 + 200 + 150 + 150 + 100 = 800 rows and 15 columns, provided all six files share the same 15 column names.
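A quick way to check the row arithmetic is to build small stand-in DataFrames in memory and compare shapes after pd.concat. The frames and column names below are made up for illustration; they are not the article's actual files.

```python
import pandas as pd

# Hypothetical stand-ins for the six files: same 15 columns, different row counts
columns = [f"col_{i}" for i in range(15)]
row_counts = [100, 100, 200, 150, 150, 100]
frames = [pd.DataFrame(0, index=range(n), columns=columns) for n in row_counts]

# Stack the frames vertically; ignore_index=True gives a fresh 0..n-1 index
merged_data_set = pd.concat(frames, ignore_index=True)

print(merged_data_set.shape)  # (800, 15)
```

If the column names differ between files, pd.concat by default takes the union of all columns and fills the gaps with NaN, so the result can end up with more than 15 columns.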

Way 2:

The approach above works, but when you have hundreds of CSV files to combine, writing a separate read statement for each one quickly becomes painful.

Python's standard library provides a module called glob for this.

It takes a path pattern (a folder path plus wildcards such as *) and returns the list of file paths that match it, which you can then iterate over.
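As a small illustration, the snippet below creates a few empty files in a temporary folder (the file names are hypothetical) and shows that glob.glob returns only the paths matching the pattern. The order of the results is not guaranteed, so wrapping the call in sorted() is a common habit.

```python
import glob
import os
import tempfile

# Create a throwaway folder containing a few hypothetical files
folder = tempfile.mkdtemp()
for name in ["dataset_1.csv", "dataset_2.csv", "notes.txt"]:
    open(os.path.join(folder, name), "w").close()

# "*" matches any run of characters, so this selects every CSV starting with "dataset_"
pattern = os.path.join(folder, "dataset_*.csv")
matches = sorted(glob.glob(pattern))

print([os.path.basename(p) for p in matches])  # ['dataset_1.csv', 'dataset_2.csv']
```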

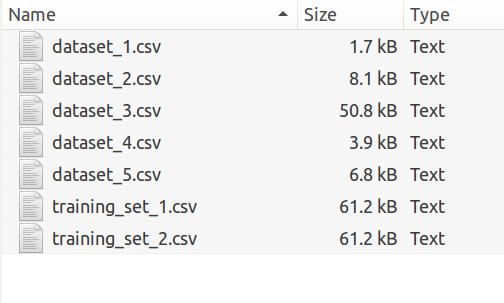

Suppose we want to read all the files whose names start with “dataset_”.

Steps:

- Import the glob library

- Specify the file-name pattern

- Iterate over all matching file names with a for loop

- Concatenate the data from each file into a single merged DataFrame
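The four steps above can be sketched as follows. To keep it self-contained, the sketch first writes three small CSV files into a temporary folder; the file names, column names and values are made up for illustration. Instead of concatenating inside the loop, it collects the DataFrames in a list and calls pd.concat once, which avoids copying the growing merged frame on every iteration.

```python
# Step 1: import glob (plus the other modules the sketch needs)
import glob
import os
import tempfile

import pandas as pd

# Hypothetical sample data: three small CSV files with identical columns
folder = tempfile.mkdtemp()
for i, n_rows in enumerate([2, 3, 4], start=1):
    sample = pd.DataFrame({"id": range(n_rows), "value": [i] * n_rows})
    sample.to_csv(os.path.join(folder, f"dataset_{i}.csv"), index=False)

# Step 2: pattern for all files whose names start with "dataset_"
pattern = os.path.join(folder, "dataset_*.csv")

# Step 3: iterate over the matching file names and read each one
frames = []
for file_name in sorted(glob.glob(pattern)):
    frames.append(pd.read_csv(file_name))

# Step 4: combine everything into one DataFrame
merged_data = pd.concat(frames, ignore_index=True)

print(merged_data.shape)  # (9, 2)
```

If the files use different separators or extensions, as in Way 1, the read call inside the loop would need to choose its arguments per file (for example sep="\t" for the tab-separated ones).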
